Thursday, September 11, 2025

New NPM supply chain attack: Lessons learned

Introduction

This post is a brief reflection on the lessons learned from the attack on a series of NPM packages.

I regularly work with TypeScript, which meant that many, if not all, of my projects were affected.

The incident highlighted several improvements I needed to implement. Here I share how I discovered the issue, the challenges I faced in mitigating the attack, and the key lessons I learned along the way.

Context

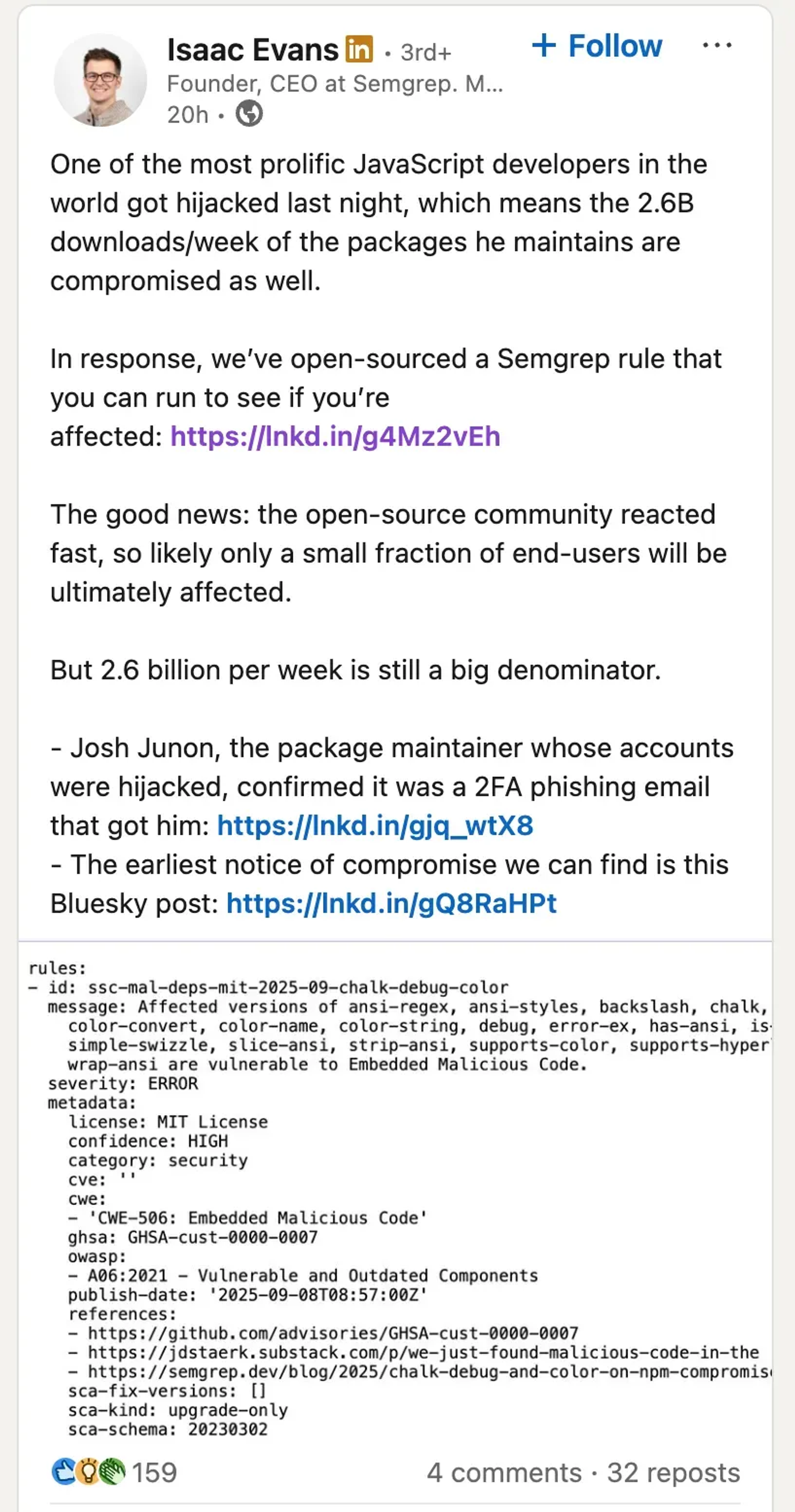

On Monday, September 8, the npm account of a well-known developer, qix, was compromised. This led to the publication of malicious versions of several high-impact NPM packages.

The infected packages contained a crypto-clipper, malware designed to steal funds by swapping wallet addresses and hijacking cryptocurrency transactions at the network layer.

Why does this matter?

To put the impact into perspective, the combined weekly downloads of the compromised packages exceed one billion.

This poses a serious threat to the JavaScript ecosystem. Malicious code injected into packages this widely used is no small matter.

But what does this mean for those of us who rely on these packages?

Let’s break it down.

How did I notice it?

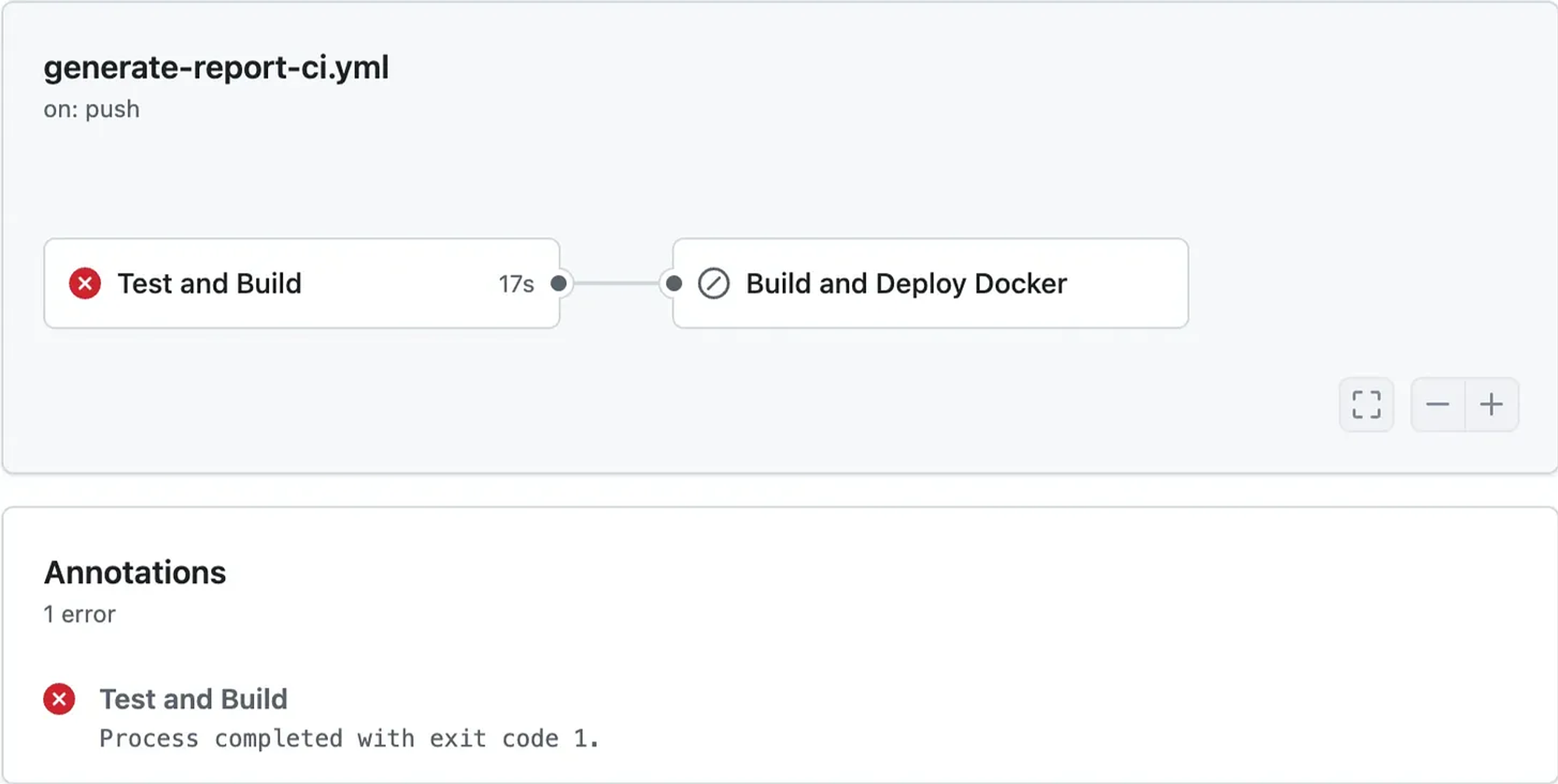

While pushing some minor changes to GitHub, I noticed that my GitHub pipeline had failed.

At first, I was puzzled. I asked myself why such a small change would cause the tests to break. A minor update shouldn’t have that much impact, but you never know.

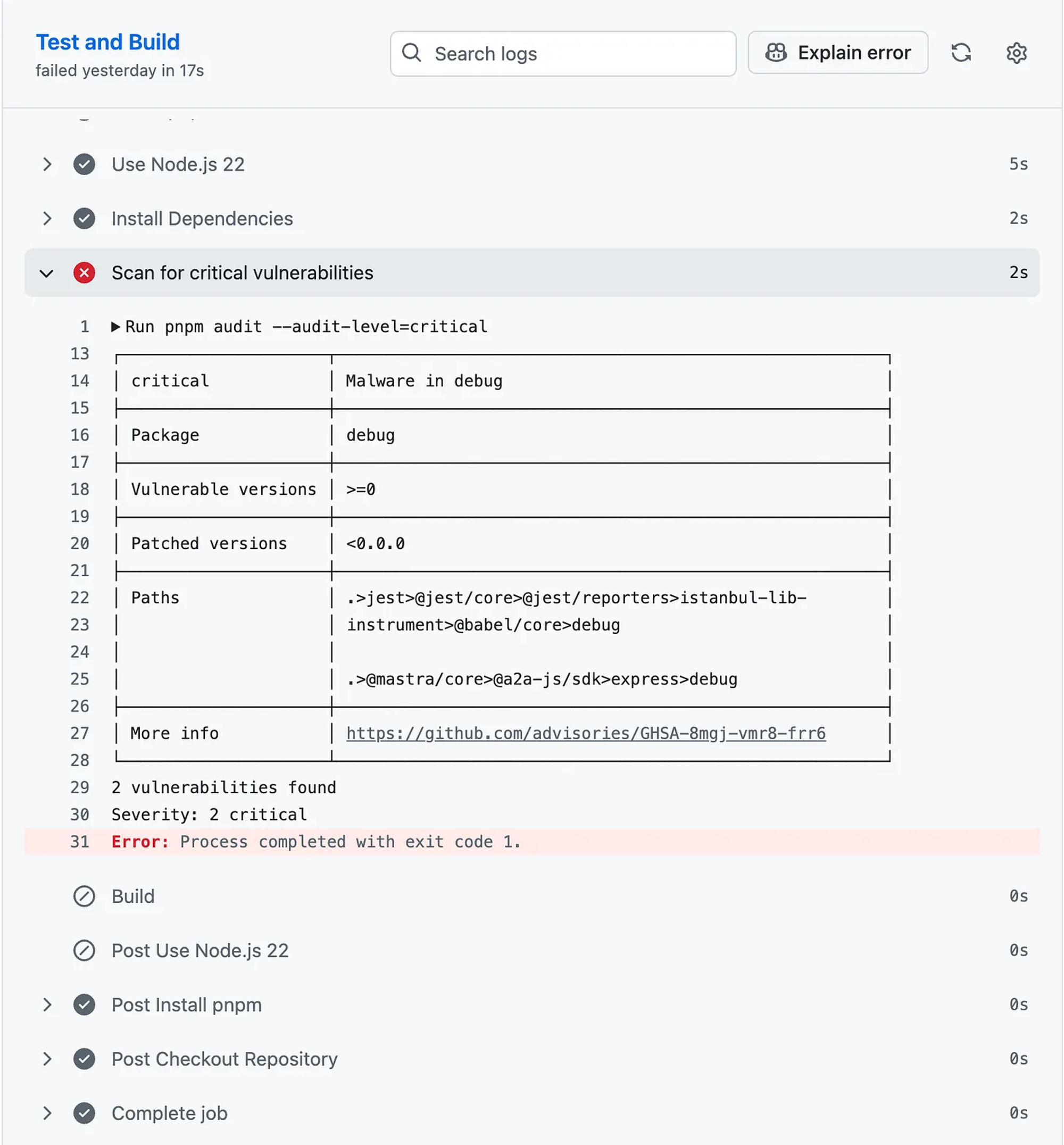

I was even more surprised when I saw the issue came up during the project audit, and worse, it was flagged as a critical vulnerability.

“Malware in debug.” Ok, now you have my attention.

What should I do now?

Fortunately, this isn’t the first time I’ve faced a compromised project in a professional setting. It’s never something you want to deal with, and I wouldn’t call it pleasant, but I already had a sense of how to respond.

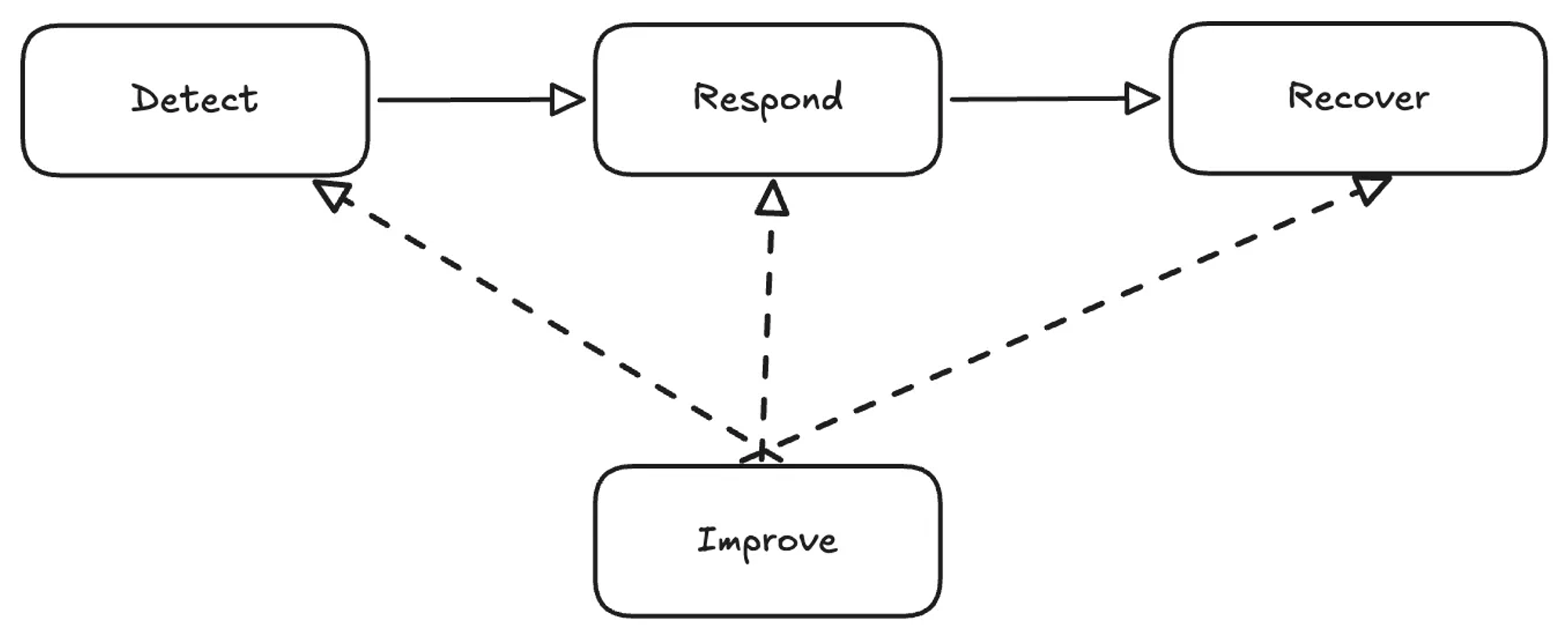

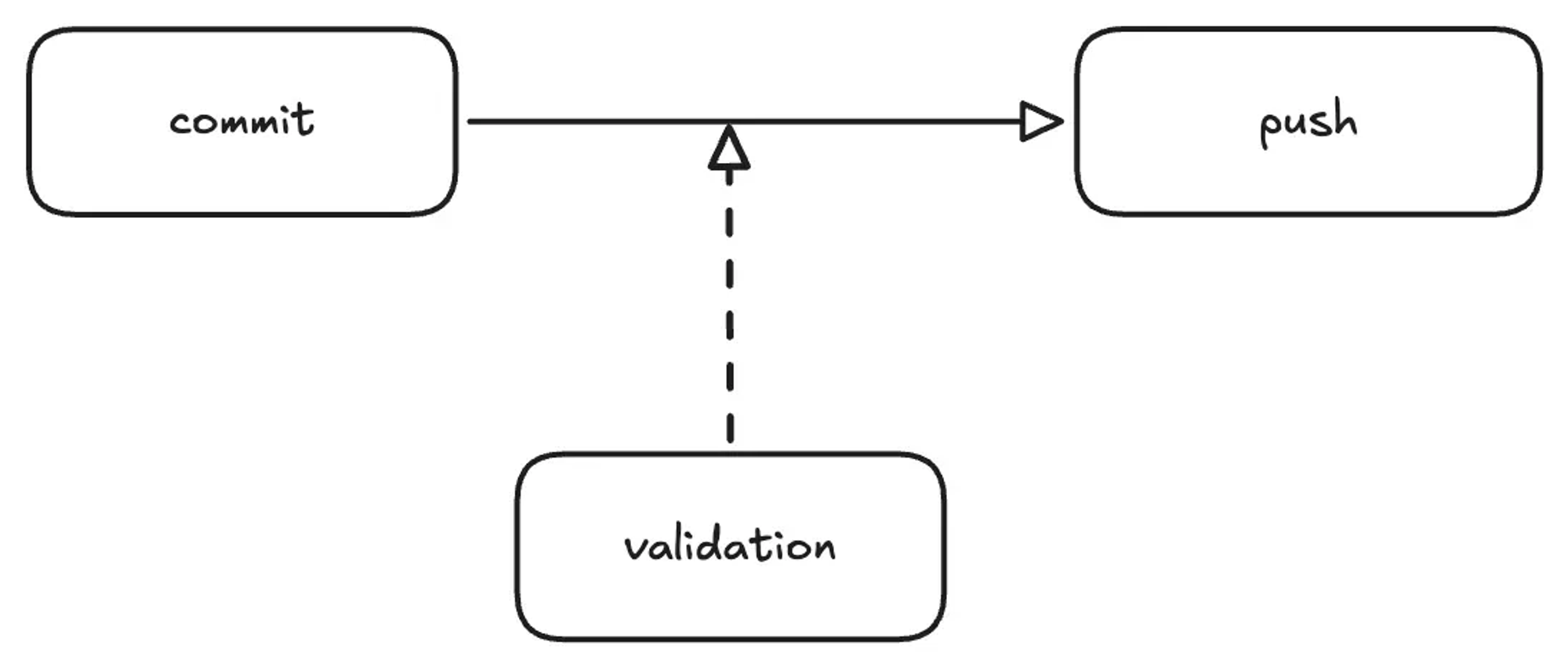

Here’s a high-level diagram of what I had in mind.

The previous diagram is a simplified version of a more robust process. If you’re interested, you can find more details in the following link.

Understanding what happened

To figure out how to respond, I first needed to understand what was going on.

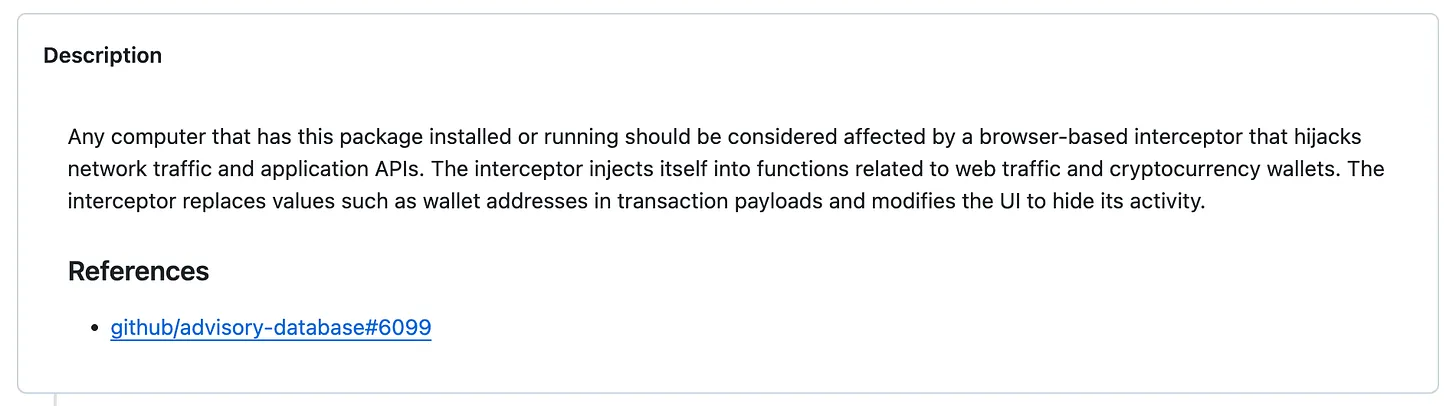

As I dug deeper and started reading the vulnerability details…

“Any computer that has this package installed or running should be considered fully compromised. All secrets and keys stored on that computer should be rotated immediately from a different computer. The package should be removed, but as full control of the computer may have been given to an outside entity, there is no guarantee that removing the package will remove all malicious software resulting from installing it.”

Alright, now you definitely have my attention.

Credential rotation

My first thought was to rotate the API credentials most likely to catch an intruder’s eye, starting with OpenAI.

Most of the credentials I use already have short lifespans and are restricted to my IP, something I was grateful for at that moment.

However, I quickly realized I didn’t have a unified record of what needed to be rotated locally. My bad.
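As a rough illustration of what such a unified record could look like, here is a minimal sketch. The `CredentialRecord` shape, the `rotationQueue` helper, and every entry except the OpenAI key are hypothetical and not taken from my actual setup. It assumes a credential needs rotating if it was present on the affected machine and has not been rotated since the incident date.

```typescript
// Hypothetical inventory entry: one row per credential I am responsible for.
interface CredentialRecord {
  name: string;            // identifier, e.g. the environment variable name
  location: string;        // where the secret lives
  exposedLocally: boolean; // was it readable on the affected machine?
  lastRotated: Date;
}

// Returns the credentials that still need rotating after an incident:
// exposed on the machine and not rotated since the incident date,
// with the oldest rotation first.
function rotationQueue(
  records: CredentialRecord[],
  incidentDate: Date,
): CredentialRecord[] {
  return records
    .filter(
      (r) => r.exposedLocally && r.lastRotated.getTime() < incidentDate.getTime(),
    )
    .sort((a, b) => a.lastRotated.getTime() - b.lastRotated.getTime());
}

// Illustrative data only; names, locations, and dates are made up.
const incident = new Date("2025-09-08T00:00:00Z");
const inventory: CredentialRecord[] = [
  { name: "OPENAI_API_KEY", location: ".env", exposedLocally: true, lastRotated: new Date("2025-06-01T00:00:00Z") },
  { name: "EXAMPLE_CI_TOKEN", location: "CI secret store", exposedLocally: false, lastRotated: new Date("2025-01-15T00:00:00Z") },
  { name: "EXAMPLE_DB_PASSWORD", location: "~/.config/example", exposedLocally: true, lastRotated: new Date("2025-09-09T00:00:00Z") },
];
const queue = rotationQueue(inventory, incident);
```

Even a flat list like this would have answered the question I was stuck on: what, exactly, lives on this machine and has not been rotated yet.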

Community Support

When I started rotating credentials, I reached out to a few people for suggestions on what else I should do.

Several colleagues shared useful advice, and I also received suggestions about tools that, honestly, I wish I had in place already.

I had already been asking around in different places, mainly Discord communities, GitHub, LinkedIn, and Reddit.

On LinkedIn, I came across information on how to determine whether I was compromised. That was particularly useful to understand the actual scope of the problem across the machines I manage.

You can run the following to check if you have the malware in your dependency tree:

rg -uu --max-columns=80 --glob '*.js' _0x112fa8

Requires ripgrep:

brew install rg

This was one of several resources I came across.
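A complementary check is to compare the lockfile against the list of compromised versions instead of searching for the obfuscated identifier. The following is a minimal sketch, assuming a `package-lock.json` with a `packages` map (lockfileVersion 2 or 3). The `findBlockedPackages` helper, the package name `left-pad-example`, and its versions are placeholders for illustration; the real blocklist should come from the advisories linked under Resources.

```typescript
// Minimal shape of package-lock.json (lockfileVersion 2 or 3).
interface LockFile {
  packages?: Record<string, { version?: string }>;
}

interface Hit {
  name: string;
  version: string;
  path: string;
}

// Returns every lockfile entry whose name and exact version are on the blocklist.
function findBlockedPackages(
  lock: LockFile,
  blocklist: Record<string, string[]>,
): Hit[] {
  const marker = "node_modules/";
  const hits: Hit[] = [];
  for (const [path, entry] of Object.entries(lock.packages ?? {})) {
    const idx = path.lastIndexOf(marker);
    // Skip the root project and workspace entries, which are not under node_modules.
    if (idx === -1 || !entry.version) continue;
    // The package name is whatever follows the last "node_modules/" segment,
    // which also covers nested and scoped packages.
    const name = path.slice(idx + marker.length);
    if ((blocklist[name] ?? []).includes(entry.version)) {
      hits.push({ name, version: entry.version, path });
    }
  }
  return hits;
}

// Illustrative data only: a placeholder package and placeholder versions.
const sampleLock: LockFile = {
  packages: {
    "": { version: "1.0.0" },
    "node_modules/left-pad-example": { version: "1.2.3" },
    "node_modules/app/node_modules/left-pad-example": { version: "1.2.4" },
  },
};
const sampleHits = findBlockedPackages(sampleLock, {
  "left-pad-example": ["1.2.4"],
});
```

In a real project the `LockFile` object would come from parsing `package-lock.json`. The check works on exact versions only, so it complements the content search above and does not replace it.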

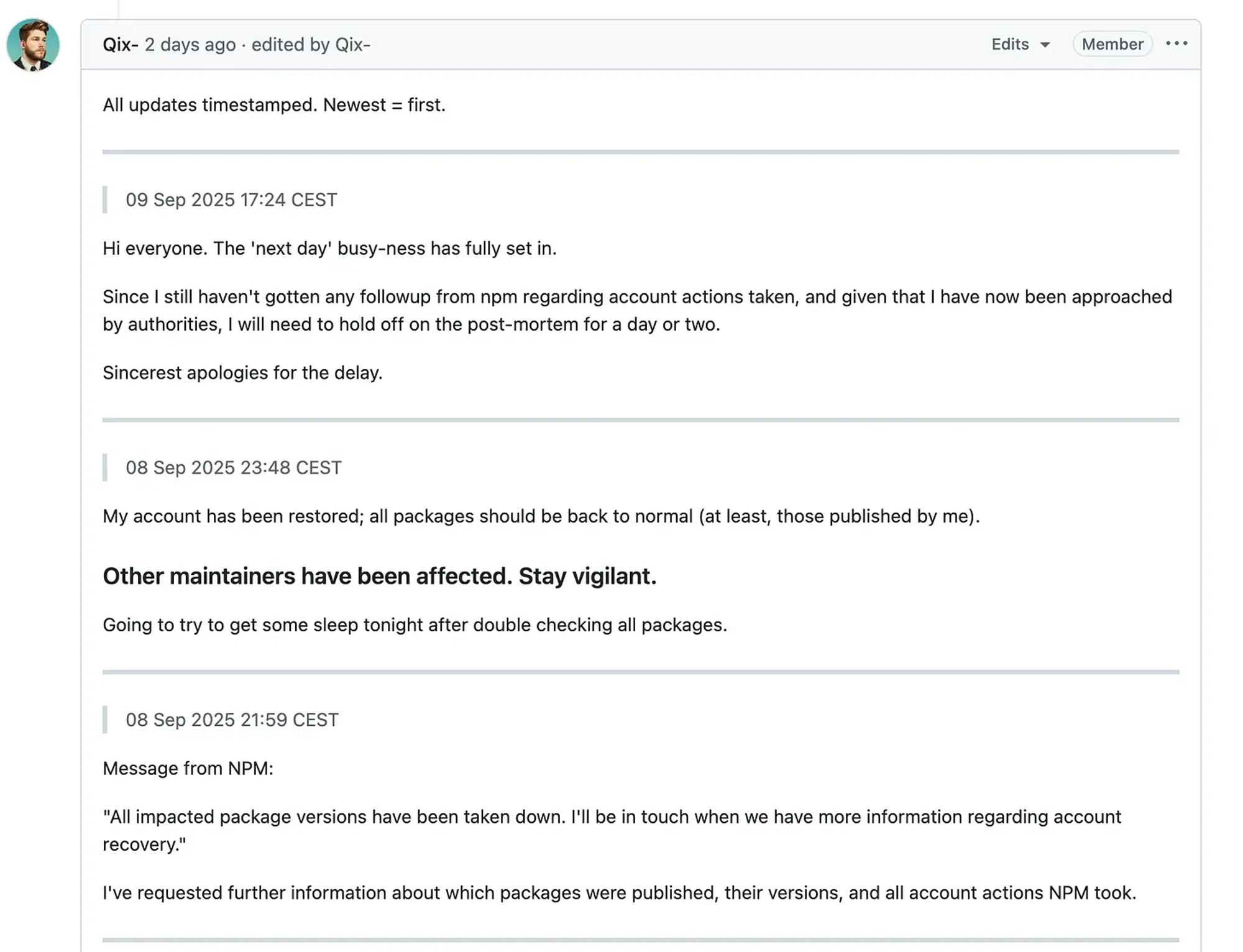

After a couple of hours, official updates started coming in confirming that the affected NPM packages had been removed.

Additionally, updates began arriving for the packages I had that were compromised.

Eventually, with official confirmation that all compromised repositories had been addressed, I began looking at how to improve my current setup.

Continuous Improvement

One thing I’m grateful for is that I caught this issue before it reached production. Still, I feel I could have detected it much earlier.

Shift Security Left

A best practice in the broader Software Development Lifecycle (SDLC) is to integrate security testing in the early stages of development.

I already run audits in my CI/CD pipelines. Locally, however, I run act (which executes GitHub Actions workflows on my machine) by hand, and anything manual is liable to be skipped.

That particular day happened to be one of those times. This highlighted the need to establish an automated validation process that can detect anomalies before a push.

It’s important that this process doesn’t disrupt the development flow, so I’m considering implementing it post-commit.
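The decision logic for such a check can be sketched as follows. This is a minimal sketch, assuming the report format that `npm audit --json` produces in npm 7 and later, where `metadata.vulnerabilities` holds a count per severity. The `countAtOrAbove` and `shouldBlock` helpers are hypothetical names, and the sample report is made up.

```typescript
type Severity = "info" | "low" | "moderate" | "high" | "critical";

// Only the part of the audit report this check needs.
interface AuditReport {
  metadata?: { vulnerabilities?: Partial<Record<Severity, number>> };
}

// Severities from least to most severe.
const SEVERITY_ORDER: Severity[] = ["info", "low", "moderate", "high", "critical"];

// Sums the findings at the given severity or worse.
function countAtOrAbove(report: AuditReport, threshold: Severity): number {
  const counts: Partial<Record<Severity, number>> =
    report.metadata?.vulnerabilities ?? {};
  return SEVERITY_ORDER
    .slice(SEVERITY_ORDER.indexOf(threshold))
    .reduce((sum, level) => sum + (counts[level] ?? 0), 0);
}

// Takes the raw JSON text of an audit report and says whether to raise the alarm.
function shouldBlock(auditJson: string, threshold: Severity = "high"): boolean {
  const report = JSON.parse(auditJson) as AuditReport;
  return countAtOrAbove(report, threshold) > 0;
}

// Illustrative report only; the counts are made up.
const sampleAudit = JSON.stringify({
  metadata: {
    vulnerabilities: { info: 0, low: 2, moderate: 0, high: 0, critical: 1, total: 3 },
  },
});
const blocked = shouldBlock(sampleAudit, "critical");
```

A hook script would pipe the output of `npm audit --json` into `shouldBlock` and print a warning or exit non-zero. For the simplest case, `npm audit --audit-level=critical` gives a similar pass/fail signal without any custom code; the sketch only becomes useful once the threshold or the reaction needs to be customized.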

The final workflow should ideally look like this.

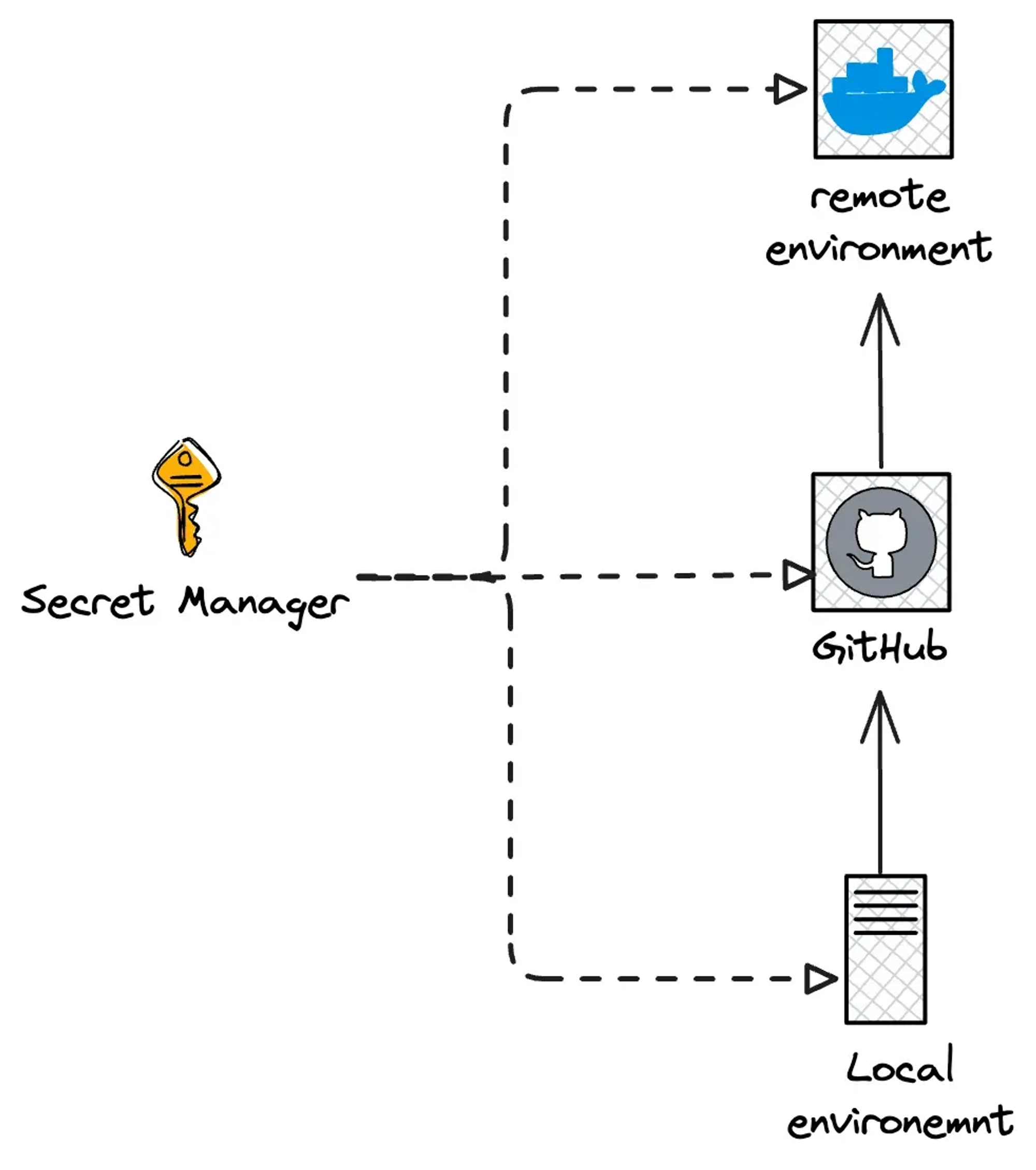

Secret Management

One consequence of having to rotate credentials after an incident is that it’s done in response to a real event, a potential threat, rather than as a regular practice.

In my daily workflow, I use several tools for password and secret management, such as 1Password, AWS Secrets Manager, Vault, you name it. But rotating credentials everywhere under the pressure that they might be compromised adds a whole new level of stress. It was a tedious process.

I would have preferred a unified process. That is nothing novel, and the suggestion to use Infisical seems excellent: it would let me stop injecting secrets manually, which was another red flag, and give me centralized control.
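On the application side, moving away from manual injection mostly means reading secrets from the process environment and failing fast when one is missing. Here is a minimal sketch, assuming a secrets manager (Infisical or any other) populates the environment before the process starts. The `requireSecrets` helper and the sample values are hypothetical.

```typescript
// Returns the requested secrets from an environment-like object,
// or throws one error that names every missing secret.
function requireSecrets<K extends string>(
  env: Record<string, string | undefined>,
  names: readonly K[],
): Record<K, string> {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing secrets: ${missing.join(", ")}`);
  }
  const out = {} as Record<K, string>;
  for (const name of names) {
    out[name] = env[name] as string;
  }
  return out;
}

// Illustrative environment only; in a Node.js app this would be process.env.
const fakeEnv = { OPENAI_API_KEY: "sk-example" };
const secrets = requireSecrets(fakeEnv, ["OPENAI_API_KEY"]);
```

The point of the design is that the code never knows where a secret came from, so rotating it in the central store requires no change to the project and leaves no copy in a local file.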

The final workflow should ideally look like this.

I have several other improvements in mind, but for the sake of brevity, I’ll end the post here.

Conclusion

In the context of this incident, I deeply appreciate the effort of the DevSecOps community in educating those of us who work with software and helping us find the balance between taking security seriously and maintaining an uninterrupted development flow.

It may sound trivial, but implementing the right security mechanisms without affecting lead time or deployment frequency is not an easy task.

For this incident, it made sense to integrate security testing early in the development process and automate it. Then, by keeping a high deployment frequency, I was able to run these security tests regularly.

I also cannot miss the opportunity to recommend, as always, reading Accelerate, a book that explains what changes organizations should make to improve their software delivery performance. Many of the lessons I’ve learned throughout my professional career are very well explained in this book. Without a doubt, it’s an invaluable and timeless source of information.

Resources

- https://jdstaerk.substack.com/p/we-just-found-malicious-code-in-the

- https://github.com/debug-js/debug/issues/1005#issuecomment-3266868187

- https://github.com/mastra-ai/mastra/issues/7604#issuecomment-3268221934